Explaining remote function, the newest SageMaker SDK functionality

One decorator is all we need?

The SageMaker Python SDK has just introduced a handy decorator, @remote, that lets you run a particular Python function as a SageMaker Job. This way, theoretically speaking, you can easily migrate your existing Python data science code to fully-fledged SageMaker jobs in minutes.

Previously, if you wanted to run something on SageMaker machines, you had to wrap it in a SageMaker Job yourself. The process was simple, but it took some effort to create the required SageMaker SDK objects correctly.

Now, all you have to do is this:

    from sagemaker.remote_function import remote

    @remote(instance_type="ml.m5.16xlarge")
    def divide(x, y):
        print(f"Calculating {x}/{y}")
        return x / y

    divide(3, 2)

Wrap your existing code with a decorator, configure it if necessary (instance_type="ml.m5.16xlarge") and voilà. Your function will now run on a beefy 64 vCPU, 256 GB RAM machine whenever executed. You can configure not only the instance type, but also the role assumed by SageMaker, the environment variables, VPC setup and many more.

What is particularly interesting is the fact that you can use it as a simple way to execute small pieces of code on beefy machines, while still developing most of the stuff locally. For example, the code below will run one function in SageMaker and one locally (where locally means “where the current Python interpreter runs”):

    from sagemaker.remote_function import remote

    # this will spin up a beefy machine
    @remote(instance_type="ml.m5.16xlarge")
    def divide(x, y):
        print(f"Calculating {x}/{y}")
        return x / y

    # this will run locally
    def multiply_by_two(x):
        print(f"Multiplying {x} by 2")
        return x * 2

    # res_one will be a regular Python floating-point number!
    res_one = divide(3, 2)
    res_two = multiply_by_two(res_one)
    res_two

    >>> 3.0

The results of the SageMaker-powered function are available as regular local Python variables.

This works both ways - you can send most Python objects like Pandas DataFrames back and forth between local environment and SageMaker.

How does it work? The deep dive.

There’s no magic, just several thousand lines of Python code in the SageMaker library.

TL;DR: Some Bash and Python scripts running OS commands on SageMaker Training Jobs with a sprinkle of cloudpickle, S3Downloader and S3Uploader!

LOCAL environment prepares everything for SageMaker (and saves that to S3)

SAGEMAKER loads its commands (function to execute and some helpers) via S3, executes it and saves the result to S3

LOCAL environment loads the result back from S3
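To make the three steps concrete, here is a minimal local simulation. It is a sketch under loud assumptions, not the SDK's code: a temporary directory stands in for the S3 bucket, the standard-library marshal and pickle modules stand in for cloudpickle, and the helper names (local_prepare, job_invoke, local_collect, run_roundtrip) and file names are made up for illustration.

```python
import builtins
import marshal
import pickle
import tempfile
import types
from pathlib import Path


def local_prepare(func, args, kwargs, bucket):
    """LOCAL: save the function and its inputs to the stand-in bucket."""
    # Ship the function by value (its bytecode), loosely in the spirit of cloudpickle.
    # Assumption: this toy version ignores closures, default arguments and globals.
    (bucket / "function.bin").write_bytes(marshal.dumps(func.__code__))
    (bucket / "arguments.pkl").write_bytes(pickle.dumps((args, kwargs)))


def job_invoke(bucket):
    """'SAGEMAKER': load the function and inputs, execute, save the result."""
    code = marshal.loads((bucket / "function.bin").read_bytes())
    func = types.FunctionType(code, {"__builtins__": builtins})
    args, kwargs = pickle.loads((bucket / "arguments.pkl").read_bytes())
    (bucket / "result.pkl").write_bytes(pickle.dumps(func(*args, **kwargs)))


def local_collect(bucket):
    """LOCAL: load the result back from the stand-in bucket."""
    return pickle.loads((bucket / "result.pkl").read_bytes())


def run_roundtrip(func, *args, **kwargs):
    """Run all three steps against a temporary directory (hypothetical helper)."""
    with tempfile.TemporaryDirectory() as tmp:
        bucket = Path(tmp)
        local_prepare(func, args, kwargs, bucket)
        job_invoke(bucket)
        return local_collect(bucket)


def divide(x, y):
    return x / y


print(run_roundtrip(divide, 3, 2))
```

In the real feature the middle step happens on another machine, which is why everything has to travel through storage as bytes rather than as live Python objects.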

Slightly more detailed version:

Let's start with the fact that the wrapped function is run as a … SageMaker Training Job! This is a slightly weird and inconsistent decision, as most “one-off jobs” are traditionally implemented as SageMaker Processing Jobs. The reason is probably the native integration of Training Jobs with SageMaker Experiments, something that the example docs for the remote function seem to encourage.

Training Jobs are usual SageMaker-compatible Docker containers, but with a special entrypoint running a Shell script that prepares the execution environment inside the container (i.e. it installs SageMaker SDK and any custom dependencies) and then runs invoke_function script from SageMaker SDK.The

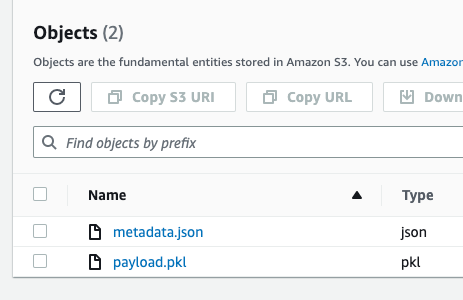

invoke_functionthat runs in the Training Job, deserializes the function code and its inputs (usingcloudpickleand S3Downloader), invokes it as a regular Python function and then saves the results back to S3. You can even browse the bucket to see that.

Do note that you can only serialize and deserialize stuff that Pickle supports (see the "Input and output for the remote function" section in the documentation).
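A quick way to get a feel for this limitation is to try serializing an object locally before sending it anywhere. The sketch below uses the standard-library pickle as a stand-in; cloudpickle handles more cases (lambdas, for instance), so this check is stricter than the real thing. is_picklable is a hypothetical helper, not an SDK function.

```python
import pickle
import threading


def is_picklable(obj):
    """Return True if the standard-library pickle can serialize obj."""
    try:
        pickle.dumps(obj)
        return True
    except Exception:
        return False


# Plain data structures serialize fine
print(is_picklable({"rows": [1, 2, 3], "label": "ok"}))

# Objects tied to the running process (locks, generators) do not
print(is_picklable(threading.Lock()))
print(is_picklable(x for x in range(3)))
```

Plain data passes, while objects bound to the current process, such as locks or generators, are rejected. Anything in that second group needs to be created inside the remote function rather than passed into it.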

The whole process is handled by the @remote decorator:

first, it serializes everything the function needs to be executed

then, it sets up a SageMaker Training Job with the proper configuration

then, it runs the job and waits until it completes

finally, it loads the outputs and deserializes them back into the local environment

In the diagram above, the blue arrows run locally while the red ones run inside a SageMaker Training Job.
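The four decorator steps can be sketched as a toy decorator factory. This is an illustration of the pattern only, under stated assumptions: toy_remote and fake_training_job are hypothetical names, the "job" is a plain local function call, the function itself is passed by reference instead of being serialized, and standard-library pickle replaces cloudpickle.

```python
import functools
import pickle


def fake_training_job(job_config, func, payload):
    """Stand-in for the Training Job: deserialize inputs, run, serialize the output."""
    args, kwargs = pickle.loads(payload)
    return pickle.dumps(func(*args, **kwargs))


def toy_remote(instance_type="ml.m5.large"):
    """Decorator factory mimicking the shape of @remote (hypothetical, runs locally)."""

    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            # 1. serialize what the function needs
            payload = pickle.dumps((args, kwargs))
            # 2. set up the "job" (only a dict here, nothing is launched)
            job_config = {"instance_type": instance_type, "job_name": func.__name__}
            # 3. run the job and wait until it completes (a blocking call here)
            result_bytes = fake_training_job(job_config, func, payload)
            # 4. load the output and deserialize it into the local environment
            return pickle.loads(result_bytes)

        return wrapper

    return decorator


@toy_remote(instance_type="ml.m5.16xlarge")
def divide(x, y):
    return x / y


print(divide(3, 2))
```

The caller still sees an ordinary function call that returns an ordinary value; all the serialization and job handling stays hidden inside the wrapper.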

Who is the functionality for?

According to the introductory blog post, this was made so that people new to SageMaker can run their training / exploratory data analysis code on the platform without investing too much time into learning SageMaker itself.

But even if you’re an advanced SageMaker user, sometimes you want to run a piece of code on a powerful machine - this might be exactly THE way to do it right now. Just add @remote and that’s it.